A Bodysuit, a Jacket, and a Pair of Stilettos. Part II

Last time we found out that stylist knowledge can be translated into machine language, that a neural network can tell a sweater from socks, and that the easiest way to visualise clothes is to assemble them from template parts, like Lego. All that was left was to put it all together and share it with the masses.

Minimum Viable Product

We settled on a mobile app as the most suitable form for a virtual stylist. Even its basic configuration would already be more impressive than anything on the market at the time. But would it take off? That was the question. Would people entrust their appearance to a soulless algorithm? Would they consent to share photos of their belongings?

We surveyed the target audience, got a green light, and defined scenarios in which the service would be theoretically useful. To understand what would actually be in demand, we decided, before developing the MVP itself, to create a beta version of its beta version and run it on a test group of fifty women aged 16-50.

Pre-MVP or Proof of Concept II

For our stylish AI to see and be seen, we had to refine and scale it:

- to expand the knowledge model (add missing clothing items and attributes);

- to extend the list of occasions (granny’s birthday, public speaking, going to the cinema, woodland walks, etc.);

- to update the rules to match the new inputs;

- to recalibrate the recommendation system for new requirements.

The virtual stylist would settle in an iOS app for a start.

For the next two and a half months, we plunged headlong into the realm of Dolce & Gabbana. We described most of the existing wardrobe, except for accessories and winter clothes, and doubled the number of attributes (down to the fastener type). The number of rules increased fifteenfold, exceeding 70 thousand. The virtual stylist turned into a digital Goliath, and only algorithmic optimisation could bring it back under control.

To grasp the magnitude of the challenge, let us return to the system’s design.

What’s Under the Bonnet

The machine does not dress up a person. The machine optimally fills 6-8 slots [body parts] with elements [clothes] within a given style, taking the user’s features into account. Each slot has its own attributes: the parameters of the user and of the clothes.

Ideally, every attribute should be determined from the picture by its own neural network, but being short on time, we had not touched the image recognition algorithms since the PoC. Roughly speaking, the algorithm could distinguish pants from a jacket and then jeans from trousers, but the details still had to be entered manually.

Filling the slots was subject to prescriptive and prohibitive rules. Each combination of attributes, as well as each complete look, received a score.
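To make the idea concrete, here is a minimal sketch of rule-based scoring. It is not the project’s actual rule engine: the rule table, the attribute names, and the convention that `None` marks a prohibitive rule are all assumptions made for illustration.

```python
from itertools import combinations

# Hypothetical rule table (not from the real knowledge base).
# A number is a prescriptive rule that adds to the score;
# None is a prohibitive rule that rejects the whole look.
RULES = {
    frozenset({"style:business", "shoes:sneakers"}): None,
    frozenset({"top:blazer", "bottom:trousers"}): 2.0,
    frozenset({"top:blazer", "shoes:stilettos"}): 1.5,
}


def score_look(attributes):
    """Return the total score of a look, or None if a prohibitive rule fires."""
    total = 0.0
    # Check every unordered pair of attributes against the rule table.
    for a, b in combinations(sorted(attributes), 2):
        key = frozenset({a, b})
        if key not in RULES:
            continue
        delta = RULES[key]
        if delta is None:
            return None  # forbidden combination
        total += delta
    return total


business_look = {"style:business", "top:blazer", "bottom:trousers", "shoes:stilettos"}
print(score_look(business_look))
```

With tens of thousands of rules, a lookup keyed by attribute pairs like this one keeps each check cheap, but the number of looks to check still grows with every slot.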

Thus, we managed to translate informal knowledge into machine language and to create a system that is universal, scalable, and adjustable to a specific person.

However, the system went through an immense number of combinations, while we aimed at real-time recommendations and query processing under five seconds. To prevent a combinatorial explosion and meet the time frame, we used the branch and bound method, and in general, it got the job done, but:

- The algorithm reduced the number of combinations by selecting the best ones sequentially, filling slot by slot and filtering options out at each step. Sometimes combinations selected at intermediate iterations did not give the optimal result at the end. You can assemble the perfect suit only to find out there are no matching shoes.

- The algorithm reduced the query processing time to several seconds at the current load. But that would not be enough once we extended the wardrobe again: either the speed of response or the quality of recommendations would suffer. Neither users nor we need this.
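The first drawback can be illustrated with a toy example. The sketch below is not our branch and bound implementation; it is a deliberately simplified greedy fill that keeps only one candidate per slot, compared with an exhaustive search. The wardrobe, the scores, and all function names are hypothetical.

```python
from itertools import product

# Hypothetical wardrobe: slot -> candidate items (slots are filled in this order).
WARDROBE = {
    "suit": ["navy suit", "grey suit"],
    "shoes": ["brown brogues"],
}

# Hypothetical scores for single items and for (earlier slot, later slot) pairs.
ITEM_SCORE = {"navy suit": 5, "grey suit": 4, "brown brogues": 1}
PAIR_SCORE = {
    ("navy suit", "brown brogues"): -4,
    ("grey suit", "brown brogues"): 2,
}


def look_score(items):
    """Sum item scores plus the scores of every pair in slot order."""
    total = sum(ITEM_SCORE[i] for i in items)
    for pos, a in enumerate(items):
        for b in items[pos + 1:]:
            total += PAIR_SCORE.get((a, b), 0)
    return total


def greedy_fill(wardrobe):
    """Fill slot by slot, keeping only the locally best candidate."""
    look = []
    for candidates in wardrobe.values():
        best = max(candidates, key=lambda c: look_score(look + [c]))
        look.append(best)
    return look


def exhaustive_fill(wardrobe):
    """Score every full combination and return the best one."""
    return list(max(product(*wardrobe.values()), key=look_score))


print(greedy_fill(WARDROBE), look_score(greedy_fill(WARDROBE)))
print(exhaustive_fill(WARDROBE), look_score(exhaustive_fill(WARDROBE)))
```

The greedy fill commits to the higher-scoring suit before it has seen the shoes, so it ends up with a weaker overall look than the exhaustive search. Pruning less aggressively narrows that gap at the cost of more combinations to evaluate.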

Therefore, for the future, we considered machine learning methods instead. Our task reduced to regression analysis:

- there is a set of objects – complete outfits and combinations of individual clothes;

- each clothing item has a set of attributes;

- each combination or outfit is rated by an expert (and later on by users) on a scale of 0 to 10.

We needed a machine learning model that could be trained on expert assessments and periodically retrained on new data (user feedback, latest trends, etc.) to predict the rating of a new look or clothes combination.
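As an illustration of what such training data might look like, the sketch below turns rated outfits into sparse rows in the libsvm-style `label index:value` text layout. The attribute names, the ratings, the helper names, and the zero-based indexing are assumptions for the example, not our actual data pipeline.

```python
def build_index(outfits):
    """Assign an integer index to every attribute seen in the data."""
    index = {}
    for attrs, _rating in outfits:
        for attr in sorted(attrs):
            index.setdefault(attr, len(index))
    return index


def to_libsvm(attrs, rating, index):
    """Encode one outfit as 'rating idx:1 idx:1 ...' with only present attributes."""
    present = sorted(index[a] for a in attrs)
    return f"{rating} " + " ".join(f"{i}:1" for i in present)


# Hypothetical expert-rated outfits: (set of attributes, rating from 0 to 10).
outfits = [
    ({"heel:stiletto", "collar:shirt"}, 8),
    ({"fit:slim"}, 5),
]

index = build_index(outfits)
for attrs, rating in outfits:
    print(to_libsvm(attrs, rating, index))
```

Only the attributes an outfit actually has appear in its row, so a row stays short no matter how many attributes exist in total.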

We considered Naive Bayes classifiers, support vector machines, decision trees, etc., but settled on factorisation machines, since they are well suited to sparse matrices. Each garment has its own individual attributes: the shape of the heel of the shoe, the type of collar and sleeve of the blouse, the fit of the trousers, and the strap of the bag. Therefore, our matrix of objects and attributes would be very sparse: for each object, only a small part of the total number of attributes is specified.
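For readers unfamiliar with factorisation machines, here is a minimal sketch of how a second-order FM scores one sparse row: a bias, a linear term, and pairwise interactions given by dot products of per-feature factor vectors. It is plain Python for illustration only, not the code of libFM or xLearn, and the weights and factors below are made-up numbers rather than trained values.

```python
def fm_predict(x, w0, w, v):
    """Second-order factorisation machine prediction for one sparse row.

    x:  dict feature index -> value (only non-zero features)
    w0: global bias
    w:  dict feature index -> linear weight
    v:  dict feature index -> list of k latent factors
    """
    linear = sum(w.get(i, 0.0) * xi for i, xi in x.items())
    k = len(next(iter(v.values())))
    pairwise = 0.0
    for f in range(k):
        # Identity: sum over pairs = 0.5 * ((sum of terms)^2 - sum of squared terms)
        s = sum(v[i][f] * xi for i, xi in x.items())
        s_sq = sum((v[i][f] * xi) ** 2 for i, xi in x.items())
        pairwise += 0.5 * (s * s - s_sq)
    return w0 + linear + pairwise


# Made-up parameters for three features with k = 2 factors each.
w0 = 1.0
w = {0: 0.5, 2: 0.25}
v = {0: [1.0, 2.0], 1: [0.0, 0.0], 2: [3.0, 0.5]}

# An outfit with features 0 and 2 present.
print(fm_predict({0: 1.0, 2: 1.0}, w0, w, v))
```

The loop touches only the non-zero features of the row, which is why the cost depends on how many attributes an outfit has rather than on the total number of attributes in the model.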

Besides, we had already worked closely with factorisation machines in our primary product: optimisation of online advertising and forecasting of ad impressions and conversions. FMs help us predict clicks with high accuracy, even though ad serving requests often lack many parameters. The two domains turned out to be very similar in terms of model training and sparseness.

We analysed FM-based libraries and found the two most common: libFM and xLearn. After reading numerous reviews and trying each of them out, we settled on xLearn, because it:

- has a more convenient API with phases of training and prediction separated;

- is faster than libFM, according to the graphs on the project’s main page;

- and, we’ll admit, has a better rating on GitHub.

So, we figured out how to improve the algorithm and which library to use, made notes, and saved them in the backlog, since the transition to the new algorithm, unfortunately, did not fit the timeline and budget of the current phase.

What’s Around the Bonnet

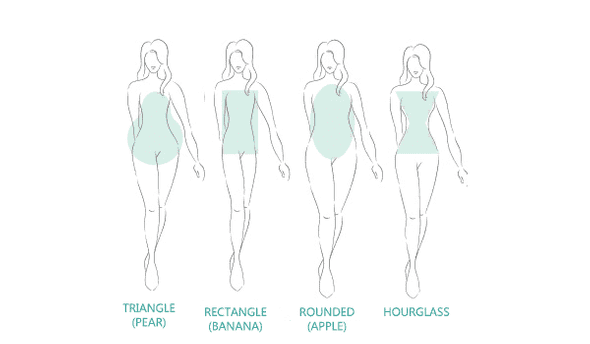

In a month and a half, we designed the iOS app, agreed it with the partner, and developed it from scratch. The user had to upload a photo of themselves to a personal account and indicate their body type and age.

To add clothes to the virtual wardrobe, a photo against a neutral background was enough. As soon as photos were uploaded, we had 12 hours to fill in all the missing attributes and determine the user’s type.

The neural network sorted the uploaded clothes: shirt to shirts, dress to dresses. All spit and polish. If only it worked IRL: one just throws the piece in the direction of the closet, and it finds its place on its own.

An important objective was to make recommendations as personalised as possible. To do this, we added Like, Dislike, and Favourites buttons. The user could rate the proposed look, save it to Favourites, leave a comment, and pin a specific clothing item or a whole category of clothes as a must-have in the outfit. The self-learning mechanism tuned recommendations to personal preferences based on this feedback and could also regularly supplement and update the knowledge base following trends.

Along with the personal wardrobe, the app also contained a mock shop with 1000 items. The system suggested how to diversify looks and visualised shop items combined with personal clothes.

At the end of this iteration, we had an advanced recommendation system. Unlike the majority of such services, it did not just copy outfits from pictures but created personalised looks out of a person’s real clothes. The system considered a detailed description of clothing pieces, user’s parameters, and external factors such as the given style, the event type, season, and all previous user ratings and wishes. You could upload information about yourself, your wardrobe, click on the “Select an Outfit” button, and get all kinds of outfits in the form of a collage of clothes in 5-8 seconds.

The app helped to get the most out of the existing wardrobe and suggested the most suitable clothes from the shop when needed. The system recommendations became comparable to a professional stylist’s work in terms of adequacy, complexity, and aesthetics.

It was time to try the product in action.

Field Test

We launched the app on TestFlight and sent out the invitations and instructions to the first part of the focus group. The app worked on all iPhone models, starting from the iPhone 5. Users started uploading pictures, some one or two, some fifty at once. They requested outfits, left likes, dislikes, and comments – things got on track. All in all, about 80 people tested the application in a month.

After a month, we asked each user whether the app was useful, how often they would use it, and what difficulties and shortcomings they encountered.

The main challenge turned out to be finding time to photograph and upload an entire wardrobe. As a result, about 2/3 of the focus group uploaded either fewer than a dozen items or none at all, played with the test shop, and soon abandoned it as well. But that is the nature of the task: to work through a wardrobe, you need its owner to participate.

Live professional analysis takes several hours and costs a pretty penny. We simplified this stage to two operations, photographing an item and uploading the photo to the app, but we were not able to get rid of it entirely.

Still, the UX had to be improved. Even with separate step-by-step instructions, users struggled to figure out what to do without in-app tooltips. For example, it was unclear whether clothes should be photographed while worn or separately.

On several devices, uploaded photos kept disappearing as persistently as the bug to blame for this kept eluding us. And sometimes the Muse popped by, so that the recommendation system sneaked in experimental outfits, suitable for the catwalk and worthy of the spotlight, but questionable for day-to-day life.

We also messed up the organisation: testing started in October-November, while the system was designed for the spring-summer season. Because of that, part of the wardrobe ended up out of use. Especially shoes. Especially sandals.

Nevertheless, the dominant response was positive, including from those who had uploaded just a few photos or none and enjoyed recommendations based on the demo shop. The general impression was that the app was useful and handy, gave new ideas, or helped find The Bag.

Users really fancied the demo shop. Initially, we believed that personalisation would be our competitive advantage: algorithms create a look out of user’s clothes tailored to a particular occasion and personal preferences. But being able to simultaneously check whether the candidate for a place in your wardrobe fitted turned out to be not a cherry on the cake, but one of the main courses.

The shop could help monetise the application. Therefore, we once again contemplated the format. Which is needed more: a virtual stylist for a personal wardrobe or a smart shopping function, a separate app or an option integrated into an online store? What would you choose?

Investor Targeting

The development of the finished product looked promising. The recommendation system gave recommendations; the application was applied; users used it. Only a winter wardrobe and basic accessories, another round of algorithm optimisation to scale the knowledge base easily, and an improved interface stood between us and the dream app.

We also intended to make an outfit calendar and the tag system for easy planning and navigation. There was room for extra features as well: garment construction, full-fledged photo-based 3D-modeling of the user and clothes, trying on hairstyles and makeup, social network elements – the solution had high potential.

It was just a matter of money. The full version would cost several hundred thousand dollars, which rose to about one million once marketing and promotion expenses were taken into account.

We have experience in raising funds. We have promoted both our core ad-tech products and projects we implemented as an outsourced team. If you are interested, we can talk about these inner workings in a separate article. But this time we were only the contractor, so we did not know the particular details.

The partner’s trustees found interested people and had already planned negotiations with major online shops, but something went south. It seemed that most of the work had already been done and a full-scale launch was looming on the horizon, but at a general meeting the project was put on hold.

To this day, blue yet fashionable programmers wander around the office. Forced to bear the burden of fashion and exquisite style, forlorn, they hope for the story to continue.