How programmers have been conquering the world of reasonably high fashion

Let’s get acquainted. We are Inventale, an ad-tech company. We specialise in Data Mining, Big Data, and artificial intelligence. Our primary product helps predict and optimise advertising campaign delivery for large publishers’ account managers, freeing their heads from countless formulas, and desktops from mammoth spreadsheets. But our expertise is not limited to online advertising.

For example, a couple of years ago, we developed a system that predicts the success of a film or series at the planning stage. Forecasting was a familiar task for us. Besides, we fancy the cinema. But this time, we want to talk about a project that made us dive into the unknown. Our partner asked: what if we created a personal pocket stylist?

A Brief Review of the Digital Fashion Market

Fashionistas can pick a digital outfit in two main ways: a “fitting room” and search by picture.

Fitting rooms are a choice of branded online shops. The model is photographed in the entire shop’s assortment, and then the user dresses them up like this:

In the second case, services use visual search to compile an outfit from a picture or video from all available online shops. Examples are Amazon Styleshop, Pinterest Lens, Asos Style Match, and Screenshop. The Russian market isn’t shy either: Sarafan Technology launched a similar service for Instagram back in 2017, and Yandex gave full access to Sloy with AR in the autumn of 2019.

Among the non-standard ways: Amazon’s hands-free camera Echo Look will choose one of your two outfits by criteria that only it understands.

None of these tools can substitute for a stylist, though. They create a look in a void and take into account neither your existing wardrobe nor your appearance. Echo Look is an exception, but you might just as well send a photo to a friend whose taste you trust. An inspiration before shopping? Yes. But hardly a stylist.

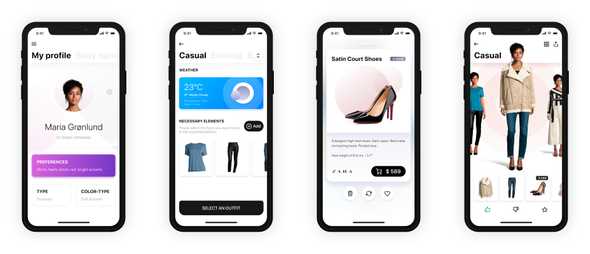

So, there are definite gaps in the digital fashion world. To fill them, we decided to develop an app that would compose an outfit out of the user’s own clothes, taking into account the given style, occasion, weather, Saturn’s position, etc.

And most importantly, it would consider the user’s physical characteristics, such as body shape, personal colour type, body type, and age, thus creating truly personalised outfits. It would also find the piece missing from a flawless look in an online shop and visualise everything on the user’s virtual model.

The technical specification looked ambitious, like a real personal stylist, only within a smartphone. The path to the dream application ran through several short iterations.

Proof of Concept

To begin with, we had to determine whether it was possible to translate the stylist’s knowledge and experience into machine language. Spoiler alert: it was. In general, the process looked as follows:

- Lock the stylist and the analyst in one room.

- Wait.

- Get the knowledge base and digitise it.

- Check the quality of looks the system offers.

- Repeat until it works out well.

The third point from the list splits into two stages. First, we described a person and each clothing element with a set of attributes: age, body type, fabric, colour, texture, shape, type of neckline, sleeves, etc. Then we set the rules for combining them. A rule-based neural network processed the data.
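The two stages can be illustrated with a minimal Python sketch. It is not the system described here: the attribute names are a small subset of those listed above, the two rules are invented placeholders rather than the stylist’s actual rules, and plain predicate functions stand in for whatever processed the real knowledge base.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Person:
    age: int
    body_type: str  # hypothetical label, e.g. "hourglass" or "round"


@dataclass(frozen=True)
class Garment:
    kind: str       # e.g. "blouse" or "skirt"
    fabric: str
    colour: str
    neckline: str = ""


# Stage 2: combination rules. Each rule returns True when the pair is allowed.
# Both rules are made up for illustration only.
def no_double_denim(person, top, bottom):
    return not (top.fabric == "denim" and bottom.fabric == "denim")


def v_neck_for_round_body(person, top, bottom):
    if person.body_type == "round":
        return top.neckline == "v-neck"
    return True


RULES = [no_double_denim, v_neck_for_round_body]


def is_acceptable(person, top, bottom):
    """A pair passes only if every rule allows it."""
    return all(rule(person, top, bottom) for rule in RULES)


def suggest_outfits(person, tops, bottoms):
    """Return every (top, bottom) pair that satisfies all rules."""
    return [(t, b) for t in tops for b in bottoms if is_acceptable(person, t, b)]
```

With a few thousand rules instead of two, the same shape still applies: the attribute descriptions are the data, and the rules decide which combinations survive.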

Alisa Zhigalina, a professional stylist from Moscow and co-founder of Izum fashion school, was the one who shared her knowledge and experience with us. Before becoming our expert, she had collaborated with The First Channel’s fashion show on Russian TV, with CTC Media, with the Novikov group, and with Carlo Pazolini.

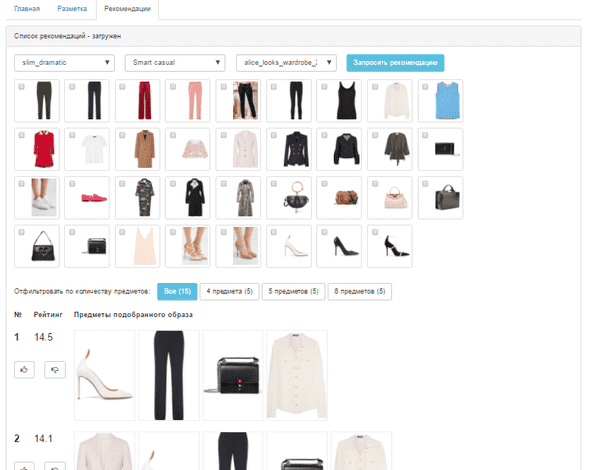

We tested the hypothesis on an abridged spring and summer capsule wardrobe for women: no accessories, no frills, only five styles, and a minimum set of attributes. We described two test wardrobes of 800-1000 items each and built a knowledge model of more than five thousand rules.

No one on Earth (except us) would need a virtual stylist for a fictional wardrobe. It’s only of use when it introduces proper order to real users’ wardrobes. So we needed to digitise a personal closet somehow, as well.

The phone’s camera was meant to become the virtual stylist’s eyes. It’s hard to find something more suitable for a visual service than a photo. At least that’s what we thought. So that the system could recognise clothes by picture, we equipped it with AI. Then we trained it on skirts and all sorts of pants: jeans, trousers, boyfriends, and whatnot.

Within three weeks, the AI had learned to recognise clothes reasonably well, which greatly facilitated our work. At first, to check that the recommendations were adequate, we had spent a tremendous amount of time manually assigning every attribute, from A to Z, to each item in the test wardrobes. From then on, our role was reduced to clarifying little details: the texture of the fabric, decor, collar type (there are dozens of them, can you imagine?!).

Anyway, the time had come to test the system in practice. Its firstborn was this minimalistic avant-garde evening attire:

Now, picture five serious bearded guys spending their working hours passionately discussing whether a bodysuit without a bottom is chic-n-class or shame-n-regret (write your opinion in the comments) instead of programming. But whatever they decided, it was the expert who had the final say. From that moment on, the iterative process went as follows: “the stylist’s giggling, rule refinement, recommendation testing.”

Although the system’s creative urges were not always unanimously welcomed, the approach to generating recommendations turned out to be quite viable. As for clothing recognition, the results were entirely predictable:

- The quality of the photograph and the framing of the clothing piece were critical.

- The AI recognised most of the attributes pretty well, but the user would still have to clarify some of them (for example, the fabric texture).
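One way to picture the second point is a recogniser that reports a confidence score per attribute, so that only the uncertain ones are sent back to the user. The sketch below is an assumption, not a description of the actual system: the confidence scores, the 0.8 threshold, and the attribute names are all hypothetical.

```python
CONFIDENCE_THRESHOLD = 0.8  # assumed cut-off, not taken from the project


def split_attributes(predictions, threshold=CONFIDENCE_THRESHOLD):
    """Separate confident predictions from those the user should confirm.

    `predictions` maps an attribute name to a (value, confidence) pair.
    """
    accepted = {}
    to_clarify = []
    for attribute, (value, confidence) in predictions.items():
        if confidence >= threshold:
            accepted[attribute] = value
        else:
            to_clarify.append(attribute)
    return accepted, to_clarify


# Hypothetical recogniser output for one photographed skirt.
predictions = {
    "kind": ("skirt", 0.97),
    "colour": ("navy", 0.91),
    "fabric_texture": ("smooth", 0.42),
}
accepted, to_clarify = split_attributes(predictions)
print("Filled in automatically:", accepted)
print("Ask the user about:", to_clarify)
```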

In the interim between discussing the new Balenciaga collection and selecting cool clothes for a test wardrobe, we had to think about visualisation. We generated about a dozen options – from a simple collage to a realistic 3D model with textures. But the latter was expensive, dreary, and “sometime in the next iteration.” So our final choice was 2D-visualisation.

And even with 2D, the scope of work was tremendous. We estimated that there were more than ten thousand common variations of basic wardrobe items. So, instead of drawing, let’s say, many different blouses, we combined one top with different sleeves and collars like a Lego kit. Those blouses became our guinea pigs: we drew all the possible variations, applied the textures of about twenty fabrics, and tried each of them on the default model.
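The saving from the Lego-kit approach is simple combinatorics, which a short Python sketch can make concrete. The part names and counts below are placeholders chosen for illustration, not the real catalogue: a handful of drawn parts and textures multiply into many distinct blouses.

```python
from itertools import product

# Placeholder parts: one base top, as in the text, plus interchangeable pieces.
BASE_TOPS = ["basic top"]
SLEEVES = ["sleeveless", "short", "long"]
COLLARS = ["round", "v-neck", "shirt collar"]
FABRICS = ["cotton", "silk", "linen"]  # the project used about twenty


def blouse_variants(tops, sleeves, collars, fabrics):
    """Yield every blouse that can be assembled from the given parts."""
    for top, sleeve, collar, fabric in product(tops, sleeves, collars, fabrics):
        yield {"top": top, "sleeve": sleeve, "collar": collar, "fabric": fabric}


variants = list(blouse_variants(BASE_TOPS, SLEEVES, COLLARS, FABRICS))
parts_drawn = len(BASE_TOPS) + len(SLEEVES) + len(COLLARS)
print(f"{parts_drawn} drawn parts and {len(FABRICS)} textures "
      f"give {len(variants)} blouses")
```

The number of drawings grows with the sum of the part counts, while the number of blouses grows with their product, which is why composing pieces scales better than drawing each garment whole.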

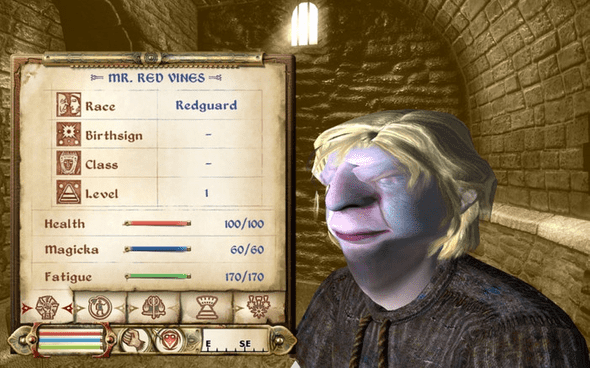

We considered using a user’s photo to make the virtual model realistic, but it became clear very soon that the picture would have to fit the template perfectly. It’s not that we doubted anyone’s selfie skills, but the chances of rivalling the artistic expression of “The Elder Scrolls IV: Oblivion” character editor were high.

Gradually, we reached a point where everyone involved knew what body part a sweatshirt was for and could tell culottes from boyfriend jeans only a little worse than the neural network. And both the expert and all the girls at our office were happy with the system’s recommendations. It was then just a matter of solemnly demonstrating the PoC results to the partner and getting a verdict: the idea was feasible, the approach was promising, let’s move on. Which is exactly what happened.